Our license allows for broad commercial use, as well as for developers to create and redistribute additional work on top of Llama 2. If, on the Llama 2 version release date, the monthly active users of the products or services made available… Open source: free for research and commercial use. We're unlocking the power of these large language models. Our latest version of Llama: Llama 2. July 18, 2023. Meta and Microsoft announced an expanded artificial intelligence partnership with the… Meta's license for the LLaMA models and code does not meet this standard. Specifically, it puts restrictions on commercial use for…

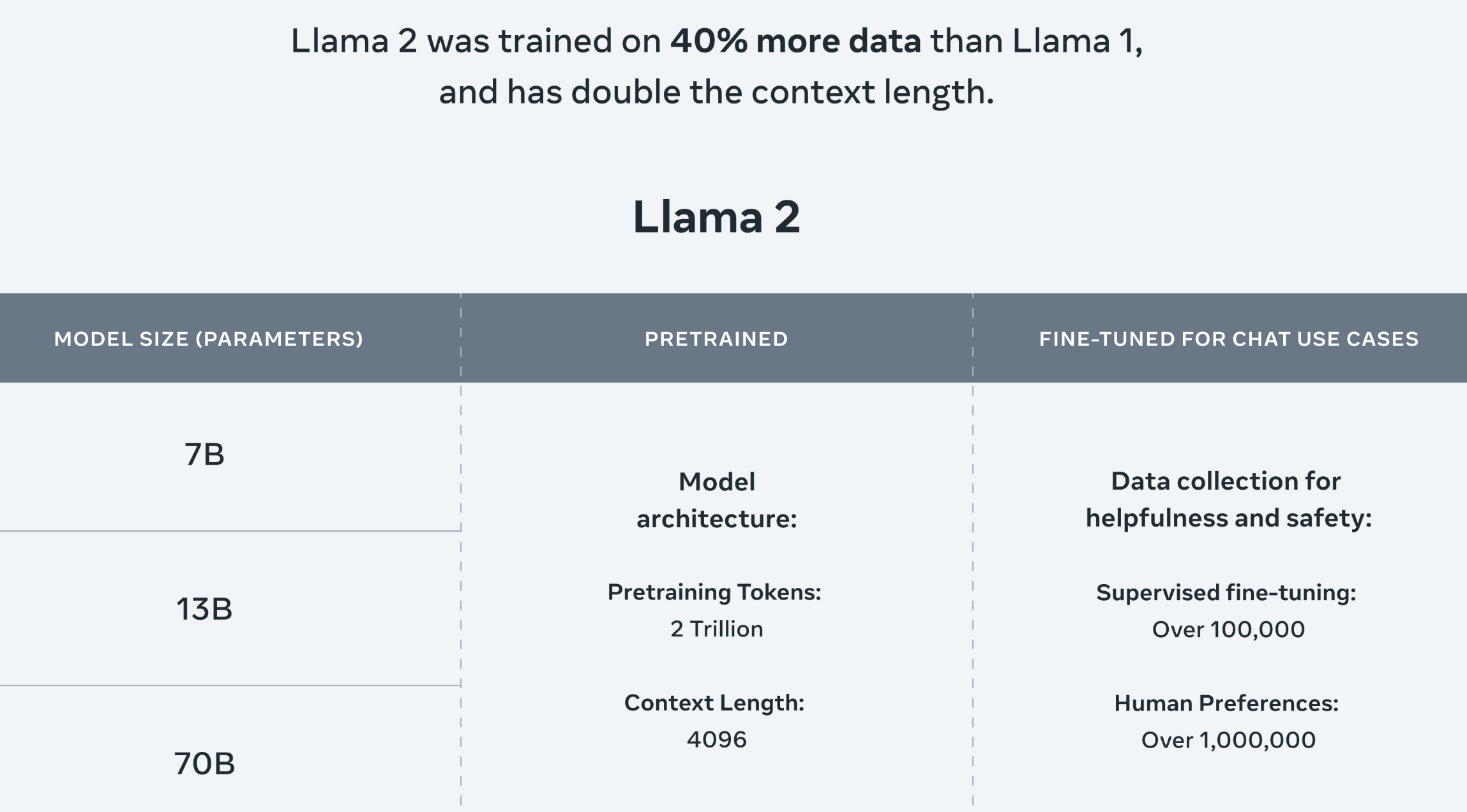

This release includes model weights and starting code for pretrained and fine-tuned Llama language models ranging from 7B to 70B parameters. This repository is intended as a minimal… We release the resources associated with QLoRA finetuning in this repository under the MIT license. In addition, we release the Guanaco model family for base LLaMA model sizes of 7B, 13B, 33B, and 65B. Llama 2 is a collection of pretrained and fine-tuned generative text models ranging in scale from 7 billion to 70 billion parameters. This is the repository for the 70B pretrained model. Llama 2 is a collection of foundation language models ranging from 7B to 70B parameters.

Machine Economy Press Substack

This dataset contains chunked extracts of 300 tokens from papers related to and including the Llama 2 research paper. Related papers were identified by following a trail of references. Open Foundation and Fine-Tuned Chat Models: in this work, we develop and release Llama 2, a collection of pretrained and fine-tuned large language models (LLMs) ranging in scale from 7 billion to 70 billion parameters. NORB is completely trained within five epochs. Test error rates on MNIST drop to 2.42%, 0.97%, and 0.48% after 1, 3, and 17 epochs, respectively. Open Foundation and Fine-Tuned Chat Models was published on Jul 18, 2023 and featured in Daily Papers on Jul 18, 2023. Authors: Hugo Touvron, Louis Martin…
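The dataset description above says each paper is split into 300-token extracts. A minimal sketch of that kind of chunking follows, assuming consecutive, non-overlapping windows and a simple whitespace split as a stand-in tokenizer; the dataset's actual tokenizer and any overlap between chunks are not stated, and `chunk_tokens` / `chunk_text` are hypothetical helper names.

```python
from typing import List

CHUNK_SIZE = 300  # tokens per extract, as stated in the dataset description


def chunk_tokens(tokens: List[str], size: int = CHUNK_SIZE) -> List[List[str]]:
    """Split a token sequence into consecutive, non-overlapping chunks.

    The final chunk may be shorter than `size`.
    """
    if size <= 0:
        raise ValueError("size must be positive")
    return [tokens[i:i + size] for i in range(0, len(tokens), size)]


def chunk_text(text: str, size: int = CHUNK_SIZE) -> List[str]:
    # Assumption: whitespace splitting stands in for the real (unspecified) tokenizer.
    tokens = text.split()
    return [" ".join(chunk) for chunk in chunk_tokens(tokens, size)]


if __name__ == "__main__":
    # A synthetic 700-token "paper" yields two full extracts and one remainder.
    paper = " ".join(f"w{i}" for i in range(700))
    chunks = chunk_text(paper)
    print(len(chunks), [len(c.split()) for c in chunks])  # 3 [300, 300, 100]
```

With a real subword tokenizer, token counts would differ from word counts, so the same text would produce a different number of extracts.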

Chat with Llama 2 70B. I can explain concepts, write poems, and code. Llama 2 models are trained on 2 trillion tokens and have double the context length of Llama 1. Llama Chat models have additionally been trained on over… …Aurelien Rodriguez, Guillaume Wenzek, Francisco Guzmán. We have a broad range of supporters around the world who believe in our open approach to today's AI: companies that have given early feedback and are… Llama 2 is a family of state-of-the-art open-access large language models released by Meta today, and we're excited to fully support the…
